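import math

import matplotlib.pyplot as plt
# Import the names this module uses from the public numpy namespace instead of
# star-importing the private numpy.core package. Note that `sum` here is
# numpy's sum (it reduces over all elements of a 2-D array).
from numpy import (arange, argmax, c_, log, mod, ndarray, ones, power, r_,
                   shape, sin, size, sum, zeros)
from numpy.linalg import norm
from numpy.matlib import mat
from numpy.random import rand
from scipy.optimize import fmin_cg, minimize
from scipy.special import expit, logit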

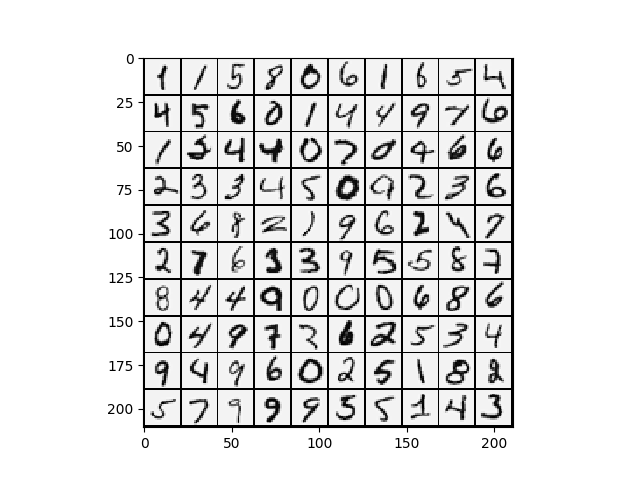

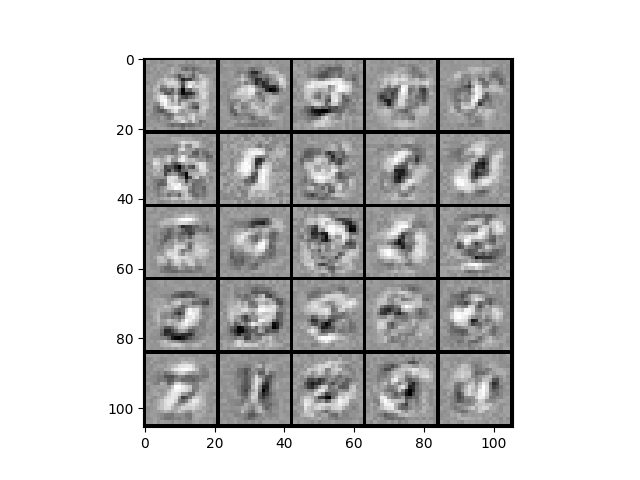

def displayData(X: ndarray, e_width=0):
    """Show the rows of X as a grid of grayscale tiles."""
    # Default tile width: assume each example is a square image.
    if e_width == 0:
        e_width = int(round(math.sqrt(X.shape[1])))
    m, n = X.shape
    e_height = int(n / e_width)
    pad = 1  # padding between tiles
    d_rows = math.floor(math.sqrt(m))
    d_cols = math.ceil(m / d_rows)
    d_array = mat(
        ones((pad + d_rows * (e_height + pad),
              pad + d_cols * (e_width + pad))))
    curr_ex = 0
    for j in range(d_rows):
        for i in range(d_cols):
            # Indices are 0-based, so stop once all m examples are placed.
            if curr_ex >= m:
                break
            # Normalize each tile by its largest absolute value.
            max_val = max(abs(X[curr_ex, :]))
            row0 = pad + j * (e_height + pad)
            col0 = pad + i * (e_width + pad)
            d_array[row0:row0 + e_height, col0:col0 + e_width] = \
                X[curr_ex, :].reshape(e_height, e_width) / max_val
            curr_ex += 1
        if curr_ex >= m:
            break
    plt.imshow(d_array.T, cmap='Greys')

def sigmoidGradient(z: ndarray) -> ndarray:
    """Derivative of the sigmoid, evaluated element-wise at z."""
    s = expit(z)
    return s * (1 - s)

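def costFuc(nn_params: ndarray,
            input_layer_size, hidden_layer_size, num_labels,
            X: ndarray, Y: ndarray, lamda: float):
    """Regularized cross-entropy cost of the two-layer network.

    Y must have the same shape as the network output (m x num_labels).
    """
    # Unroll the flat parameter vector into the two weight matrices.
    Theta1 = nn_params[: hidden_layer_size * (input_layer_size + 1)] \
        .reshape(hidden_layer_size, input_layer_size + 1)
    Theta2 = nn_params[hidden_layer_size * (input_layer_size + 1):] \
        .reshape(num_labels, hidden_layer_size + 1)
    m = size(X, 0)
    # Squared weights without the bias column, for the regularization term.
    temp1 = power(Theta1[:, 1:], 2)
    temp2 = power(Theta2[:, 1:], 2)
    # Forward pass: prepend a bias column before each layer.
    h = expit(c_[ones((m, 1), float), expit(
        (c_[ones((m, 1), float), X] @ Theta1.T))] @ Theta2.T)
    J = sum(-Y * log(h) - (1 - Y) * log(1 - h)) / m \
        + lamda * (sum(temp1) + sum(temp2)) / (2 * m)
    return J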

def gradFuc(nn_params: ndarray,
            input_layer_size, hidden_layer_size, num_labels,
            X: ndarray, Y: ndarray, lamda: float):
    """Backpropagation gradient of costFuc, returned as a flat 1-D array."""
    # Unroll the flat parameter vector into the two weight matrices.
    Theta1 = nn_params[: hidden_layer_size * (input_layer_size + 1)] \
        .reshape(hidden_layer_size, input_layer_size + 1)
    Theta2 = nn_params[hidden_layer_size * (input_layer_size + 1):] \
        .reshape(num_labels, hidden_layer_size + 1)
    m = size(X, 0)
    # Forward pass.
    a_1 = c_[ones((m, 1), float), X]
    z_2 = a_1 @ Theta1.T
    a_2 = c_[ones((m, 1), float), expit(z_2)]
    a_3 = expit(a_2 @ Theta2.T)
    # Backward pass: output error, then hidden-layer error (bias dropped).
    err_3 = a_3 - Y
    err_2 = (err_3 @ Theta2)[:, 1:] * sigmoidGradient(z_2)
    # Copies of the weights with the bias column zeroed (bias is not regularized).
    temptheta2 = c_[zeros((size(Theta2, 0), 1)), Theta2[:, 1:]]
    temptheta1 = c_[zeros((size(Theta1, 0), 1)), Theta1[:, 1:]]
    Theta2_grad = (err_3.T @ a_2 + lamda * temptheta2) / m
    Theta1_grad = (err_2.T @ a_1 + lamda * temptheta1) / m
    grad = r_[Theta1_grad.reshape(-1, 1), Theta2_grad.reshape(-1, 1)]
    return grad.flatten()

def nnCostFunction(nn_params: ndarray,
                   input_layer_size, hidden_layer_size, num_labels,
                   X: ndarray, y: ndarray, lamda: float):
    """Return the regularized cost J and its gradient as a column vector.

    nn_params must be a 2-D column vector and y an (m x 1) integer array of
    labels in 1..num_labels.
    """
    # Unroll the column vector into the two weight matrices.
    Theta1 = nn_params[: hidden_layer_size * (input_layer_size + 1), :] \
        .reshape(hidden_layer_size, input_layer_size + 1)
    Theta2 = nn_params[hidden_layer_size * (input_layer_size + 1):] \
        .reshape(num_labels, hidden_layer_size + 1)
    m = size(X, 0)
    # One-hot encode the labels (label k maps to column k - 1).
    Y = zeros((m, num_labels))
    for i in range(m):
        Y[i, y[i, :] - 1] = 1
    # Squared weights without the bias column, for the regularization term.
    temp1 = power(Theta1[:, 1:], 2)
    temp2 = power(Theta2[:, 1:], 2)
    # Forward pass.
    a_1 = c_[ones((m, 1), float), X]
    z_2 = a_1 @ Theta1.T
    a_2 = c_[ones((m, 1), float), expit(z_2)]
    a_3 = expit(a_2 @ Theta2.T)
    J = sum(-Y * log(a_3) - (1 - Y) * log(1 - a_3)) / m \
        + lamda * (sum(temp1) + sum(temp2)) / (2 * m)
    # Backward pass.
    err_3 = a_3 - Y
    err_2 = (err_3 @ Theta2)[:, 1:] * sigmoidGradient(z_2)
    delta_2 = err_3.T @ a_2
    delta_1 = err_2.T @ a_1
    # Copies of the weights with the bias column zeroed (bias is not regularized).
    temptheta2 = c_[zeros((size(Theta2, 0), 1)), Theta2[:, 1:]]
    temptheta1 = c_[zeros((size(Theta1, 0), 1)), Theta1[:, 1:]]
    Theta2_grad = (delta_2 + lamda * temptheta2) / m
    Theta1_grad = (delta_1 + lamda * temptheta1) / m
    grad = r_[Theta1_grad.reshape(-1, 1), Theta2_grad.reshape(-1, 1)]
    return J, grad
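
# Sketch (an addition, not part of the original code): costFuc and gradFuc
# expect an already one-hot Y, which nnCostFunction builds row by row. The
# same matrix can be produced in one indexing step. `one_hot_labels` is a
# hypothetical helper and assumes integer labels in 1..num_labels.
import numpy as np


def one_hot_labels(y, num_labels):
    # Row k of the identity matrix is the one-hot vector for label k + 1.
    return np.eye(num_labels)[np.asarray(y).ravel() - 1]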

def randInitializeWeights(L_in: int, L_out: int) -> ndarray:
    """Random weights, uniform in [-epsilon_init, epsilon_init)."""
    epsilon_init = 0.12
    # The extra column holds the bias weights.
    W = rand(L_out, L_in + 1) * 2 * epsilon_init - epsilon_init
    return W
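
# Sketch (an addition): one commonly used heuristic derives the initialization
# range from the layer sizes as sqrt(6) / sqrt(L_in + L_out). Whether the 0.12
# above was chosen this way is an assumption, and the helper name
# `suggested_epsilon` is hypothetical.
import math


def suggested_epsilon(L_in, L_out):
    return math.sqrt(6) / math.sqrt(L_in + L_out)

# For example, suggested_epsilon(400, 25) is roughly 0.119.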

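def debugInitializeWeights(fan_out, fan_in):
    """Deterministic weights for debugging: sin(1), sin(2), ... scaled by 1/10."""
    n_weights = fan_out * (1 + fan_in)  # the extra column holds the bias
    W = sin(arange(1, n_weights + 1).reshape(fan_out, 1 + fan_in)) / 10
    return W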

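def computeNumericalGradient(J, theta: ndarray):
    """Central-difference estimate of the gradient of J at theta.

    theta must be a 2-D column vector, and J(theta) must return a tuple
    whose first element is the cost.
    """
    numgrad = zeros(shape(theta))
    perturb = zeros(shape(theta))
    e = 1e-4
    for p in range(size(theta)):
        # Perturb one parameter at a time.
        perturb[p, :] = e
        loss1 = J(theta - perturb)[0]
        loss2 = J(theta + perturb)[0]
        numgrad[p, :] = (loss2 - loss1) / (2 * e)
        perturb[p, :] = 0
    return numgrad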
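
# Sketch (an addition): the same central-difference idea for a flat 1-D
# parameter vector and a cost function that returns a plain scalar, which is
# the form costFuc uses. `numerical_gradient_flat` is a hypothetical name.
import numpy as np


def numerical_gradient_flat(f, theta, e=1e-4):
    theta = np.asarray(theta, dtype=float)
    numgrad = np.zeros_like(theta)
    perturb = np.zeros_like(theta)
    for p in range(theta.size):
        perturb[p] = e
        numgrad[p] = (f(theta + perturb) - f(theta - perturb)) / (2 * e)
        perturb[p] = 0.0
    return numgrad

# For f(x) = sum(x**2) the estimate should be close to the exact gradient 2*x.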

def checkNNGradients(lamda=0):
    """Compare backpropagation with numerical gradients on a tiny network."""
    input_layer_size = 3
    hidden_layer_size = 5
    num_labels = 3
    m = 5
    # Deterministic test weights, inputs and labels.
    Theta1 = debugInitializeWeights(hidden_layer_size, input_layer_size)
    Theta2 = debugInitializeWeights(num_labels, hidden_layer_size)
    X = debugInitializeWeights(m, input_layer_size - 1)
    y = 1 + mod(arange(1, m + 1), num_labels).reshape(-1, 1)
    # Unroll the parameters into a single column vector.
    nn_params = r_[Theta1.reshape(-1, 1), Theta2.reshape(-1, 1)]

    def costFunc(p):
        return nnCostFunction(p, input_layer_size, hidden_layer_size,
                              num_labels, X, y, lamda)

    cost, grad = costFunc(nn_params)
    numgrad = computeNumericalGradient(costFunc, nn_params)
    # Stack the two column vectors so they print side by side.
    print(c_[numgrad, grad])
    print('The above two columns you get should be very similar.\n',
          '(Left-Your Numerical Gradient, Right-Analytical Gradient)', sep='')
    diff = norm(numgrad - grad) / norm(numgrad + grad)
    print('If your backpropagation implementation is correct, then \n',
          'the relative difference will be small (less than 1e-9). \n',
          '\nRelative Difference: {}'.format(diff), sep='')

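def predict(Theta1: ndarray, Theta2: ndarray, X: ndarray) -> ndarray:
    """Predict a label in 1..num_labels for each row of X, as a column vector."""
    m = size(X, 0)
    num_labels = size(Theta2, 0)
    # Forward pass: prepend a bias column before each layer.
    h = expit(c_[ones((m, 1), float), expit(
        (c_[ones((m, 1), float), X] @ Theta1.T))] @ Theta2.T)
    # argmax is 0-based, labels are 1-based.
    p = argmax(h, 1).reshape(-1, 1) + 1
    return p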
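
# Sketch (an addition): fmin_cg and minimize are imported above but never
# called. One way to drive scipy.optimize.minimize with a separate cost and
# gradient, in the style of costFuc and gradFuc, is shown on a toy quadratic.
# The `_toy_*` names are hypothetical. A real run would presumably pass
# costFuc, gradFuc, a flat 1-D initial parameter vector, and the remaining
# arguments (layer sizes, X, Y, lamda) through `args`.
import numpy as np
from scipy.optimize import minimize as _minimize


def _toy_cost(p, target):
    # Scalar cost, minimized at p == target.
    return 0.5 * float(np.sum((p - target) ** 2))


def _toy_grad(p, target):
    # Gradient of _toy_cost with respect to p, same shape as p.
    return p - target


def _toy_train():
    target = np.array([1.0, -2.0, 3.0])
    result = _minimize(_toy_cost, np.zeros(3), args=(target,),
                       jac=_toy_grad, method='CG')
    return result.x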
